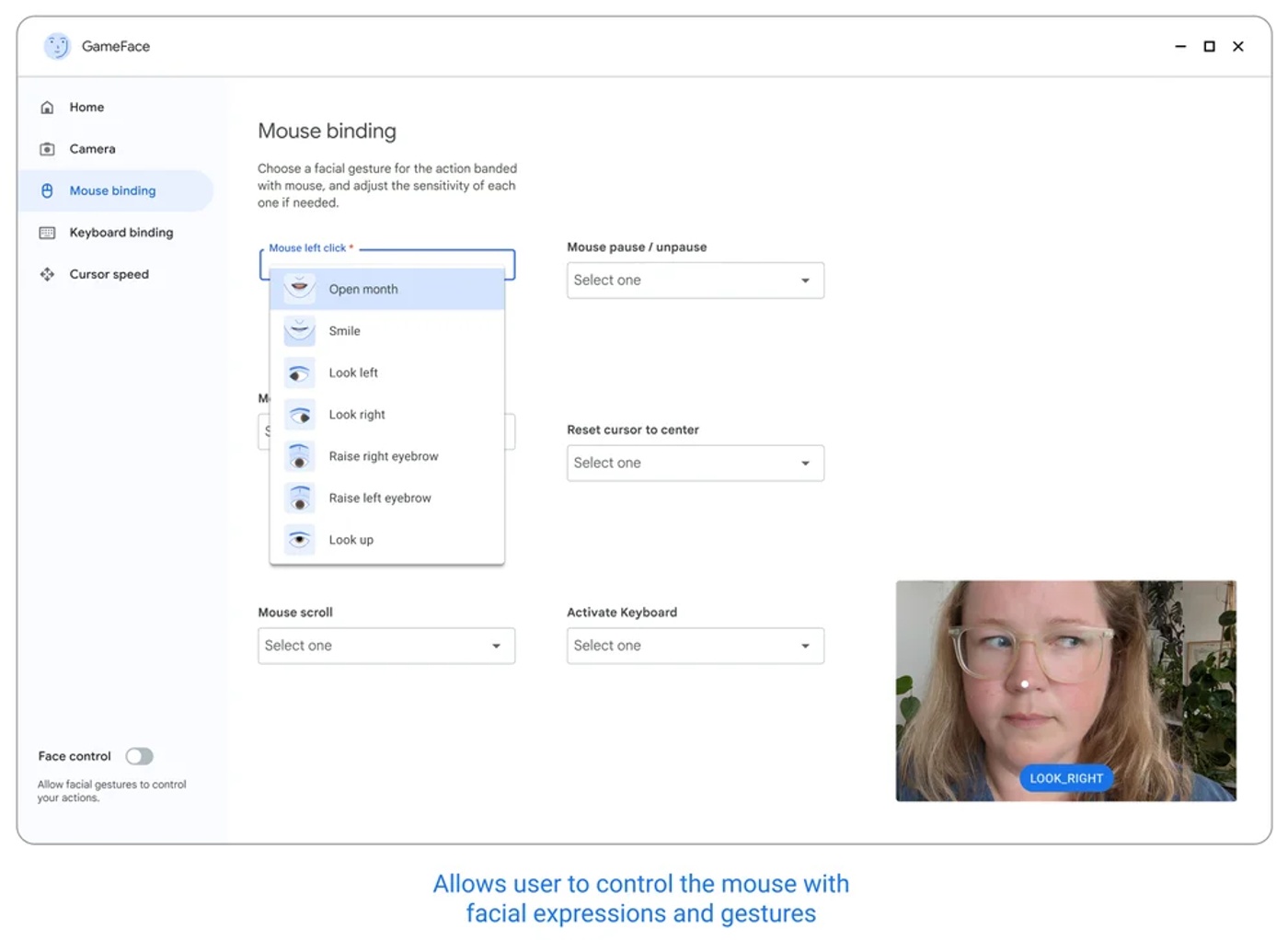

Google continues to unveil notable innovations at I/O, its annual developer conference. One of the highlights of this year's conference was Project Gameface, which lets users control Android apps with facial gestures. The technology was first introduced for desktop last year, where it let users move the cursor with facial gestures. Google announced that it is being brought to the Android platform this year.

Applications on the Android Platform Will Be Controlled with Facial Gestures

Project Gameface uses Google's MediaPipe and its Face Landmarks Detection API to detect 468 points on the face. Detected facial expressions and head movements are then converted into cursor movements and actions that control apps on Android devices. For example, users will be able to perform right or left clicks with facial expressions. Although the feature was initially developed as an accessibility tool for gamers, it has the potential to be a great help for people with reduced mobility.
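To make the idea of turning head movements and expressions into cursor input more concrete, here is a minimal Python sketch. It is not Project Gameface's actual code and it does not call MediaPipe. It assumes that a face-tracking step has already produced a normalized nose position (values between 0 and 1) and a score between 0 and 1 for each expression. The function names, gesture names (`open_mouth`, `raise_eyebrows`), screen size, gain, and thresholds are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    x: float
    y: float


def move_cursor(cursor, prev_nose, nose, screen=(1080, 2400),
                gain=2.0, dead_zone=0.005):
    """Shift the cursor by the change in nose position between two frames.

    prev_nose and nose are (x, y) pairs in normalized 0..1 coordinates
    (an assumption about the tracker's output, not a documented format).
    """
    dx = nose[0] - prev_nose[0]
    dy = nose[1] - prev_nose[1]
    # Ignore tiny movements so that natural head jitter does not move the cursor.
    if abs(dx) < dead_zone:
        dx = 0.0
    if abs(dy) < dead_zone:
        dy = 0.0
    width, height = screen
    # Scale to pixels, then clamp so the cursor stays on the screen.
    x = min(max(cursor.x + dx * gain * width, 0.0), width - 1)
    y = min(max(cursor.y + dy * gain * height, 0.0), height - 1)
    return Cursor(x, y)


def detect_click(scores, threshold=0.6):
    """Return the action bound to the first expression above the threshold."""
    # Hypothetical bindings; a real app would let the user configure these.
    bindings = {"open_mouth": "left_click", "raise_eyebrows": "right_click"}
    for gesture, action in bindings.items():
        if scores.get(gesture, 0.0) >= threshold:
            return action
    return None


if __name__ == "__main__":
    cursor = Cursor(540, 1200)
    cursor = move_cursor(cursor, prev_nose=(0.50, 0.50), nose=(0.55, 0.50))
    print(cursor, detect_click({"open_mouth": 0.9}))
```

The dead zone and the per-gesture threshold are the two knobs that matter most in a sketch like this: the first keeps the cursor steady while the head is at rest, and the second decides how pronounced an expression must be before it counts as a click.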

New Opportunities for Android Developers

The project is open source and will be available to Android developers, who can integrate the technology into their own apps. As a result, users will be able to operate applications using only facial expressions, with no physical contact required. Google aims to bring this technology to a wider audience and further improve the user experience.