In recent years, major technology companies have been working to bring more artificial intelligence (AI) to mobile devices such as smartphones and tablets. Meta has taken a notable step in this direction with MobileLLM, a language model designed specifically for devices with limited memory and compute. Because it is far smaller than typical large AI models, it can run efficiently on the device itself.

The Meta Reality Labs team, in collaboration with PyTorch and Meta AI Research (FAIR), built a model with fewer than 1 billion parameters. Compared with massive models such as GPT-4, whose size has not been officially disclosed but is widely reported to exceed one trillion parameters, MobileLLM has roughly a thousandth as many. Fewer parameters mean a smaller file to store and less memory to load, a significant advantage on devices with limited storage.
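To make the storage argument concrete, here is a rough back-of-the-envelope sketch. The function name `weight_footprint_gb` and the choice of 16-bit weights are assumptions made for illustration, and the one-trillion figure is only a placeholder for a "massive" model, not a confirmed size for any real system.

```python
def weight_footprint_gb(num_params: int, bits_per_weight: int = 16) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes).

    Ignores activations, caches, and file-format overhead.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative comparison: a 1-billion-parameter model versus a
# hypothetical 1-trillion-parameter model, both stored at 16 bits per weight.
small = weight_footprint_gb(1_000_000_000)        # about 2 GB
large = weight_footprint_gb(1_000_000_000_000)    # about 2000 GB
print(f"small: {small:.1f} GB, large: {large:.1f} GB")
```

Under these assumptions the smaller model fits comfortably in a phone's storage, while the larger one does not come close.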

Miniaturization of Artificial Intelligence Models!

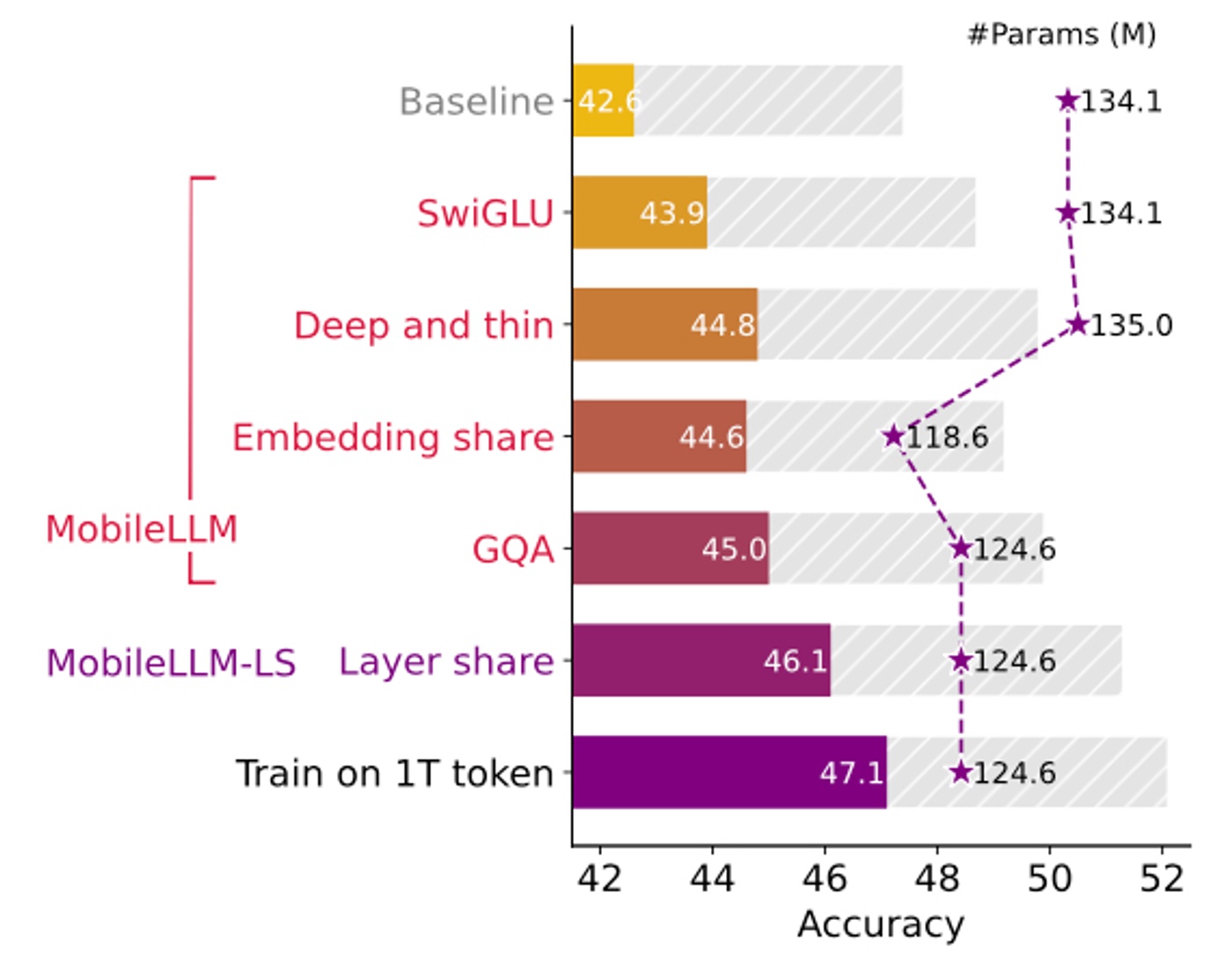

According to a post on X by Meta’s chief AI scientist Yann LeCun, MobileLLM uses a deeper, thinner architecture and gains efficiency by sharing weights. Its standout techniques are embedding sharing, grouped-query attention, and block-wise weight sharing.
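The effect of these three techniques on parameter count can be illustrated with simple arithmetic. The sketch below is not Meta's implementation: the function names and the sizes used (vocabulary of 32,000, width of 512, 8 attention heads) are hypothetical, and it assumes that block-wise sharing means adjacent transformer blocks reuse the same weights.

```python
def embedding_params(vocab_size: int, dim: int, shared: bool) -> int:
    """Input embedding plus output projection.

    With sharing, one vocab_size x dim matrix serves both roles.
    """
    one_matrix = vocab_size * dim
    return one_matrix if shared else 2 * one_matrix


def attention_params(dim: int, n_heads: int, n_kv_heads: int) -> int:
    """Projection weights of one attention layer (biases ignored).

    Q and output projections stay dim x dim; K and V shrink when
    several query heads share one key/value head.
    """
    head_dim = dim // n_heads
    q_and_out = 2 * dim * dim
    k_and_v = 2 * dim * (n_kv_heads * head_dim)
    return q_and_out + k_and_v


def layer_schedule(n_unique_blocks: int, repeats: int) -> list[int]:
    """Order in which stored blocks are executed.

    Each stored block runs `repeats` times in a row, so depth grows
    without adding weights.
    """
    return [block for block in range(n_unique_blocks) for _ in range(repeats)]


# Hypothetical sizes, chosen only for illustration.
print(embedding_params(32_000, 512, shared=False))  # 32768000
print(embedding_params(32_000, 512, shared=True))   # 16384000
print(attention_params(512, n_heads=8, n_kv_heads=8))  # 1048576 (standard multi-head)
print(attention_params(512, n_heads=8, n_kv_heads=2))  # 655360 (grouped-query)
print(layer_schedule(3, 2))  # [0, 0, 1, 1, 2, 2]
```

In a sub-billion-parameter model the embedding tables make up a large share of the total, which is why tying them saves proportionally more than it would in a very large model.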

According to the research results, the 350-million-parameter version of MobileLLM comes close to the 7-billion-parameter LLaMA-2 model on API-calling tasks, despite being about 20 times smaller. This suggests that a carefully designed small model can remain competitive on certain tasks while drastically reducing its size. MobileLLM is seen as an important step toward making AI more accessible on mobile devices.